Beyond all that has gone on with AIQ and Cambridge Analytica, a lot more has come out about Facebook’s practices, things that I always suspected they did, for why else would they collect data on you even after you opted out?

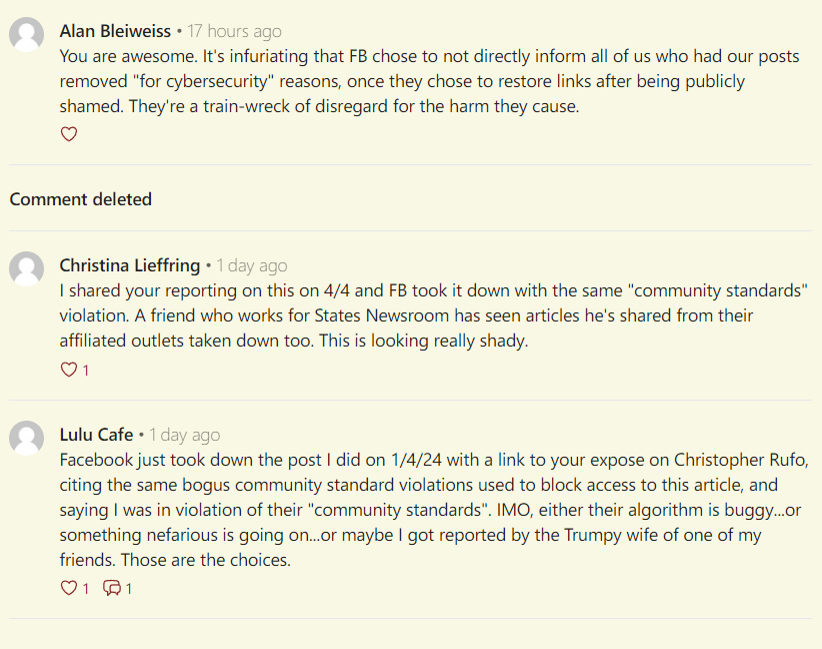

Now, Sam Biddle at The Intercept has written a piece that demonstrates that whatever Cambridge Analytica did, Facebook itself does far, far more, and not just to 87 million people, but to all of its users (that’s either 2,000 million if you believe Facebook’s figures, or around half that if you believe my theories), using its FBLearner Flow program.

Biddle writes (link in original):

This isn’t Facebook showing you Chevy ads because you’ve been reading about Ford all week — old hat in the online marketing world — rather Facebook using facts of your life to predict that in the near future, you’re going to get sick of your car. Facebook’s name for this service: “loyalty prediction.”

Spiritually, Facebook’s artificial intelligence advertising has a lot in common with political consultancy Cambridge Analytica’s controversial “psychographic” profiling of voters, which uses mundane consumer demographics (what you’re interested in, where you live) to predict political action. But unlike Cambridge Analytica and its peers, who must content themselves with whatever data they can extract from Facebook’s public interfaces, Facebook is sitting on the motherlode, with unfettered access to staggering databases of behavior and preferences. A 2016 ProPublica report found some 29,000 different criteria for each individual Facebook user …

… Cambridge Analytica begins to resemble Facebook’s smaller, less ambitious sibling.

As I’ve said many times, I’ve no problem with Facebook making money, or even using AI for that matter, as long as it does so honestly, and I would hope that people would take as a given that we expect it to do so ethically. If a user like me has opted out of ad preferences, having taken the time many years ago to check the settings and returning to the page regularly to make sure Facebook hasn’t altered them (as it often does), then I expect that opt-out to be respected (my investigations show that it isn’t). Sure, show me ads to pay the bills, but not ones that are tied to preferences that I gave you no permission to collect. As far as I know, the ad networks we work with respect these rules if readers have opted out at aboutads.info and the EU equivalent.

Regulating Facebook mightn’t be that bad an idea if there’s no punishment for these guys essentially breaking basic consumer laws (as I know them to be here) as well as the codes of conduct they sign up to with industry bodies in their country. As I said of Google in 2011: if the other 60-plus members of the Network Advertising Initiative can create cookies that respect the rules, why can’t Google? Here we are again, except the main player breaking the rules is Facebook, and the data they have on us are far more precise than some Google cookies.

Coming back to Biddle’s story, he sums up the company as a ‘data wholesaler, period.’ The claim of 29,000 criteria per user is very easy to believe for those of us who have popped into Facebook ad preferences and found thousands of items collected about us, even after opting out. We also know that the Facebook data download shows an entirely different set of preferences, which means either the ad preference page is lying or the download is lying. In either case, those preferences are being used, manipulated and sold.

Transparency can help Facebook through this crisis, yet all we saw from CEO Mark Zuckerberg was more obfuscation and feigned ignorance before the Senate and the House. This exchange last week between Rep. Anna Eshoo of Palo Alto and Zuckerberg was a good example:

Eshoo: It was. Are you willing to change your business model in the interest of protecting individual privacy?

Zuckerberg: Congresswoman, we have made and are continuing to make changes to reduce the amount of data …

Eshoo: No, are you willing to change your business model in the interest of protecting individual privacy?

Zuckerberg: Congresswoman, I’m not sure what that means.

In other words, they want to preserve their business model and keep things exactly as they are, even if they are probably in violation of a 2011 US FTC decree.

The BBC World Service News carried the hearings but, as far as I know, little made it on to the nightly TV news here.

This is down to one of two things. Either it is the natural news cycle: when Christopher Wylie blew the whistle on Cambridge Analytica in The Observer, it was major news, and subsequent follow-ups haven’t piqued the news editors’ interest in the same way. Or the media were only outraged as it connected to Trump and Brexit, and now that we know it’s far more widespread, it doesn’t matter as much.

There’s still hope that the social network can be a force for good, if Zuckerberg and co. are actually sincere about it. If Facebook has this technology, why employ it for evil? That may sound a naïve question, but if you genuinely were there to better humankind (and not rate your female Harvard classmates on their looks) and you were sitting on a motherlode of user data, wouldn’t you ensure that the platform were used to create greater harmony between people rather than sow discord and spur murder? Wouldn’t you refrain from bragging that you have the ability to influence elections? The fact that Facebook doesn’t, and continues to see us as units to be milked in the matrix, should worry us a great deal more than an 87 million-user data breach.