PS.: As of 11.28 a.m. GMT, eight hours after the post below, Facebook has put its normal reporting options back.—JY

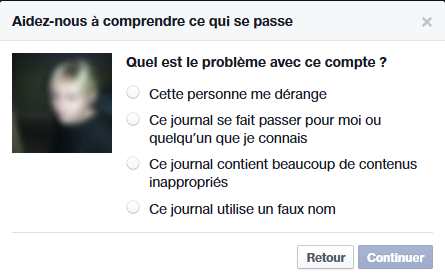

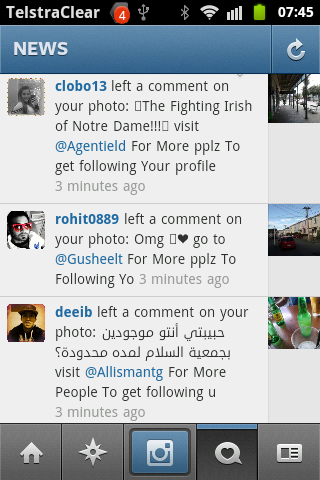

Facebook appears to be giving up on the bot fight. As of today, you can no longer mark an account as a fake one: the closest option is to say that it is using a false name (an entirely different reason, in my opinion).

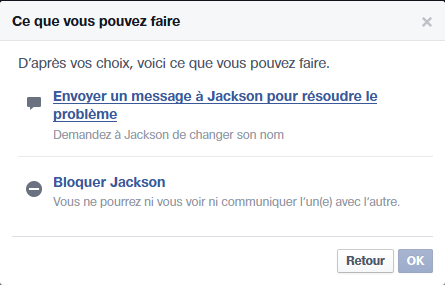

Facebook also now doesn’t want to hear from you if that is the case. You are expected to advise the bot (!) of your concern, or block it (which isn’t very helpful if you are running groups).

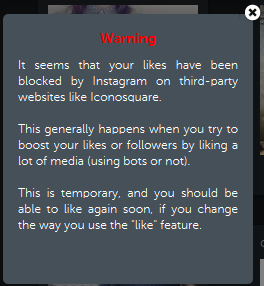

And I thought the 40-per-day limit was bad.

On Twitter, it was pointed out to me that I shouldn’t care, because Facebook is smart enough to figure out which are bots.

That’s actually not true: Facebook isn’t smart enough. Of the accounts I reported in the last month, Facebook allowed a handful to remain. When I urged them to look again, they then told me they had reconsidered and accepted that I was right. The accounts were then deleted.

Basically, without human intervention, Facebook actually has no way—unless, I imagine, it spots a bot net using the same IP address—of knowing where to start.

When you add this to the fact that Facebook uses click farms, it’s not a very rosy picture for anyone who needs to use the platform for marketing. You’re going to get fake likes and spam because of these bots and bot nets.

Yet Facebook gets a lot of things right. They remain responsive on legal issues, in particular. I’d like to see them get this part right.

Bot activity peaked in the last quarter of 2014, when I encountered my personal record of 277 per day. It’s been several a day for most of 2015, but they are on the rise again.

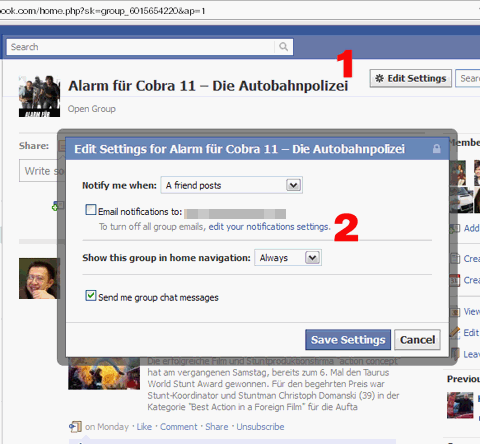

By reporting, hopefully the Facebook boffins can see the patterns of new bots (they do change their MOs from time to time) and guard against them for other users.

We could, of course, allow the bots to spam and impersonate people (the latter is also on the rise, just in the last week, when two of my friends became victims) and Facebook can deal to them ex post facto, which I accept is one way with not too many down sides.

Nevertheless, I think it’s irresponsible to ignore the bots, given that they affect all businesses. I also reckon we’re doing Facebook a favour by keeping its platform as clean as possible. If I were being selfish about it, I see real potential for them to harm my own businesses with fake likes, undermining engagement. It’s part of modern life: if you work on Facebook, or any part of your business relies on Facebook, you should be concerned.

In 2009, I saw Vox go down, at a time when bots overran the system. I can’t make a causal link because I don’t have enough inside knowledge, but I do know that in my last year on that platform, I could not post (or, rather, I had to wait days for a compose window rather than milliseconds). Others were encountering some interesting bugs, too: one user had to switch browsers just to use it.

The symptoms are there: bots, fatigue over the platform, and now, a company that really can’t be arsed to hear from you when things are going south.

One computer expert has told me that the Facebook set-up is far more robust than Vox ever was, and I accept that, but at the same time I don’t believe the regular outages over the last 12 months are a coincidence.

Email is still surviving despite the fact that the majority of messages are spam, so the “ignore them” camp has a point.

But you know me: if people ignored all the bad stuff going on, we wouldn’t have known Google was lying to people over Ads Preferences Manager and snooping on iPhones. And, on a wider scale on the internet, no one would have heard of Edward Snowden or Glenn Greenwald, or, locally, Nicky Hager, the man whom a Labour politician called a conspiracy theorist, before a National politician later did the same. I’d rather keep the pressure up, even if the matter is minor.

On that note, it’s time to work on a response to a proposed WCC traffic resolution. It might affect 14 households, but it’s still worth doing.

This is because Facebook doesn’t want to take the actions required to host a social network. All they are interested in is the ads posted on the site, which produce a good amount of Facebook’s income.

They’re as unethical as the bots, pedophiles, and social predators that hound Facebook’s network to target their victims. I believe that creating a social media site such as Facebook and then unmistakably neglecting to monitor the network and rid it of such behavior should make the creator an accessory to the crimes that take place on it.

Put it this way: the creator of Facebook created an environment in which despicable elements can patrol and prey on the unsuspecting or naive. An environment of this nature should have standards that the creator or CEO of the network has to abide by in order to keep the network open. A CEO who turns a blind eye and allows such travesties to take place should be held responsible for the crimes committed on the social network. While the CEO of a network cannot be directly responsible for the crimes, they should, however, be accountable for creating the accessory used to commit them. Therefore, neglecting to monitor the network, or to take the steps necessary to work towards a safer social network, should be treated as being an accessory to the crimes committed, due to unmistakable neglect to govern such an “environment”. The environment is the accessory.