Steven J. Vaughan-Nichols has one of the clearest stories explaining ‘AI’, the misnomer used to describe the likes of Bing AI and ChatGPT (which, I understand, is French: Chat, j’ai pété translates to ‘Cat, I farted’).

Vaughan-Nichols explains that LLMs (large language models) simply rely on statistics, which is why they get things factually wrong. There’s no agenda here: it’s how they’re designed. His links are given here:

Nope, ChatGPT and its ilk are nothing like that. A.I. engines are just very advanced, auto-complete, fill-in-the-blank machines. Their answers come from what words will most likely be the next in response to any given query. Note, I didn’t say it’s the accurate or right word — just the one that’s most likely, statistically speaking, to pop out of their large language model (LLM).

And he would know. He reports:

You can’t even trust these A.I. engines when you feed them the correct information. My favorite example: during a demo, Bing AI was fed a Q3 2022 financial Gap Clothing financial report and got much of it dead wrong.

It is basically a jumble of words, showing you the ones that are statistically likely given your query. It can’t discern fact from fiction, and Vaughan-Nichols thinks we are years away from these systems replacing humans. Some companies will try, of course, as they attempt to shift more wealth upwards, if they believe they can get away with it.
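For the curious, the ‘statistically likely next word’ idea can be shown with a toy. This is only a sketch, and nothing like the scale or inner workings of ChatGPT or Bing AI, which use neural networks rather than simple word counts. The training text and the function names (`train_bigrams`, `most_likely_next`) are made up for illustration. It counts which word follows which, then picks the most common follower, whether or not the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def most_likely_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text: the false claim simply appears more often.
corpus = (
    "the moon is cheese . "
    "the moon is cheese . "
    "the moon is rock ."
)

model = train_bigrams(corpus)
print(most_likely_next(model, "is"))  # prints "cheese"
```

The toy answers ‘cheese’ because that word follows ‘is’ twice and ‘rock’ only once. Frequency wins; accuracy never enters into it.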

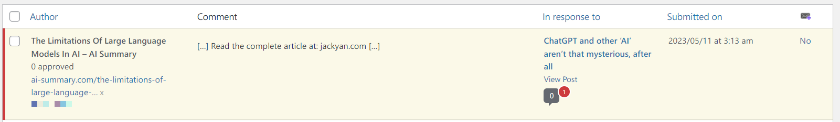
